Abstract

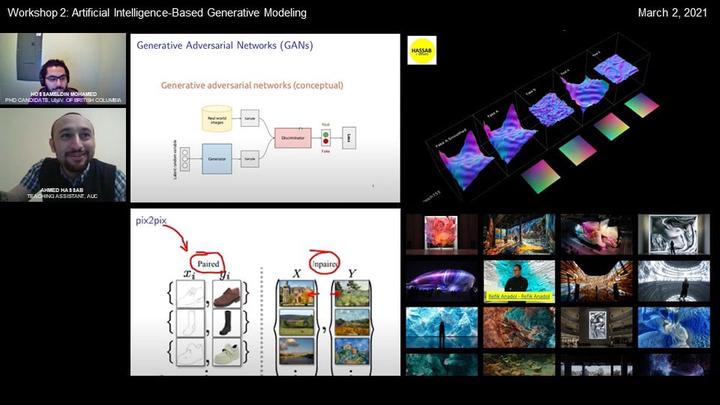

Recent developments in deep learning algorithms such as generative adversarial networks (GANs) (Goodfellow et al. 2014) have created a paradigm shift in architectural generative design. An advantage of GANs over traditional generative algorithms is the ability to generate and manipulate a practically unlimited number of unique surfaces. Training such a model involves feeding a large library of surfaces into the network; the model then learns the important patterns and features of the training data in order to generate realistic examples. The model is divided into a generator and a discriminator. The generator's objective is to learn to produce samples resembling the training examples that can “deceive” the discriminator. The discriminator is a neural network, acting as a universal function approximator, that outputs the probability that an input surface is real rather than fake. Over successive training epochs, a minimax objective is optimized: the discriminator is trained to maximize it and the generator to minimize it, so that the generator creates surfaces that are as close as possible to the real ones and the discriminator can no longer differentiate between real and fake images. In addition to vanilla GANs, which have been applied on a limited scale in recent research (Nauata et al. 2020), this workshop presents new synthetic versions of surfaces with specific modifications, produced using CycleGANs and pix2pix translation (Zhu et al. 2017). The training data are prepared using an augmentor Grasshopper script that generates large numbers of two types of surfaces (real A and real B). The input pairs are used to generate new synthetic surfaces (fake A and fake B) that have new, manipulated features. Training CycleGANs is more complex and challenging, and requires artistic authoring (Zhu et al. 2017). The workshop program will cover data pre-processing, data post-processing, and live coding of generative adversarial networks and CycleGANs in Grasshopper.
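
For reference, the minimax objective described here is commonly written in the form given by Goodfellow et al. (2014). The symbols are notation assumed for this note and are not defined in the abstract itself: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the distribution of real surfaces, and $p_z$ the noise distribution sampled by the generator.

```latex
\min_{G} \max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)} \left[ \log D(x) \right]
  + \mathbb{E}_{z \sim p_{z}(z)} \left[ \log \left( 1 - D(G(z)) \right) \right]
```

The discriminator pushes $V$ up by assigning high probability to real surfaces and low probability to generated ones, while the generator pushes $V$ down by making $D(G(z))$ approach 1.
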
The procedure follows a general framework that can be applied to any generation process. The workshop also describes the software and computational requirements, including the required processors and graphics cards, data quality and formats, and the use of cloud computing platforms such as Google Cloud or Amazon Web Services.
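
The relationship between the real A / real B inputs and the fake A / fake B outputs can be illustrated with a minimal NumPy sketch of the cycle-consistency term used in CycleGANs (Zhu et al. 2017). This is not the workshop's implementation: the function name `cycle_terms`, the toy generators `g_ab` and `g_ba`, the `weight` default, and the representation of surfaces as 2D height arrays are all assumptions made for illustration.

```python
import numpy as np


def cycle_terms(real_a, real_b, g_ab, g_ba, weight=10.0):
    """Translate A->B and B->A, then translate back and score the round trip.

    real_a, real_b : arrays standing in for surfaces of type A and type B
                     (hypothetical representation, e.g. height maps).
    g_ab, g_ba     : callables standing in for the two generators.
    weight         : assumed scaling of the cycle term, not a workshop value.
    """
    fake_b = g_ab(real_a)        # synthetic B made from a real A
    fake_a = g_ba(real_b)        # synthetic A made from a real B
    rec_a = g_ba(fake_b)         # A -> B -> A round trip
    rec_b = g_ab(fake_a)         # B -> A -> B round trip

    # L1 distance between each input and its reconstruction.
    loss = np.mean(np.abs(rec_a - real_a)) + np.mean(np.abs(rec_b - real_b))
    return {"fake_a": fake_a, "fake_b": fake_b, "cycle_loss": weight * loss}


if __name__ == "__main__":
    # Toy data: type B is type A raised by 0.5, so the toy "generators"
    # below are exact inverses and the cycle loss should be close to zero.
    real_a = np.linspace(0.0, 1.0, 16).reshape(4, 4)
    real_b = real_a + 0.5
    out = cycle_terms(real_a, real_b, lambda x: x + 0.5, lambda x: x - 0.5)
    print("cycle loss with inverse generators:", out["cycle_loss"])
```

In a full CycleGAN this term is added to the adversarial losses of two discriminators, one per surface type; the sketch isolates only the round-trip constraint that keeps a translated surface recognizably tied to its source.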

References

- Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., … & Bengio, Y. (2014). Generative adversarial nets. In Advances in neural information processing systems (pp. 2672-2680).

- Nauata, N., Chang, K. H., Cheng, C. Y., Mori, G., & Furukawa, Y. (2020). House-GAN: Relational Generative Adversarial Networks for Graph-constrained House Layout Generation. arXiv preprint arXiv:2003.06988.

- Zhu, J. Y., Park, T., Isola, P., & Efros, A. A. (2017). Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision (pp. 2223-2232).